NEXT : I will keep adding more information to this article progressively.

NEXT : I will explain how to deliver an effective keynote alongside Julia White, Corporate Vice President, Microsoft Azure (worldwide).

NEXT : Our VISEO team will open-source on GitHub the code that is NOT under NDA.

INTRODUCTION

Context : During Julia White's keynote at Microsoft Experiences 2018 (*), together with Guilhem Villemin, I had the opportunity to illustrate Julia’s point about IoT, IoT Edge and AI, which together make up the Intelligent Edge : https://www.viseo.com/fr/emea/actualites/viseo-au-microsoft-experiences-2018-compte-rendu

(*) The largest European Microsoft event, with 20,000 people registered, over 150,000 connected on live TV, plus many thousands on replay.

I was there as a Microsoft Regional Director (Microsoft's worldwide “RD program”) and an Azure MVP, working as a full-time employee (FTE) of VISEO.

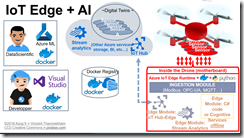

Architectural overview

Designed in 2017 and OpenSourced in Jan 2018 – for Scott Guthrie – RedShirt Tour Labs

OpenSourced Labs: https://github.com/azugfr/RedShirtTour-IoT-Edge-AI-Lab

To go deeper into the topic:

BOM – Bill of Material

- Team : You need a solid “Rambo” team that will deliver on time no matter how hard the work is, a great customer, ALTAMETRIS (a 100% spin-off of the SNCF railway company), a craftsman (well, here a craftswoman : Sacha Lhopital, who writes Clean Code and brings AI expertise), and Artem Sheiko, our Data Scientist, whom I refer to during the keynote.

- Probably about 20 people contributed to the entire project (started with IoT Edge in 2016)

- Vincent Thavonekham : Project Director & Speaker of the Keynote

- Vincent holds the Azure MVP award

- Vincent is part of Microsoft's visionary leaders program, RD (Regional Director) : https://rd.microsoft.com/en-us/about/

- Igor Leontiev : CSA Lead – Cloud Solution Architect | MVP Azure

- Artem Sheiko : Data Scientist specialized in Azure Big Data

- Sacha Lhopital : AI Dev & Azure IoT Dev ; https://www.meetup.com/fr-FR/MUGLyon/events/250854003/

- Christophe Creuseveau : Director of the IoT Dev Team in Grenoble, plus about 10 people on his team (months of work on Azure IoT Edge, plus weeks on Azure Cognitive Services) ==> MANY thanks

- Industrial customer :

- Guilhem Villemin, CTO Altametris / #SNCF ; https://g-dev.fr/?page_id=10

- Alain Morice #SNCF : Senior Business Analyst, Project Leader and source of novel ideas …

- and many other people who helped us, particularly my colleagues in Grenoble

- Massive amount of data

- Azure Subscription

- To orchestrate the DevOps deployment of the various Docker containers as modules : Azure IoT Hub > IoT Edge

- To centralize the source code : Azure DevOps CI/CD + GIT

- To run Azure Custom Vision : https://www.customvision.ai (free trial)

- DRONE : a real industrial drone running on Linux

- Either this one (worth over half a million dollars), if you are one of the 10 people in the world who own that monster, like the one owner in France : Nicolas Pollet, the CEO of ALTAMETRIS, below.

- Or the one on stage (worth between $20,000 and $40,000)

- Alternatively, use another drone without Linux and add an external “box” running Linux alongside it (it could be a Raspberry Pi, but then your AI must be very light on CPU). In that case you will not have access to the drone’s telemetry (coordinates, orientation, battery level, …)

- DevOps : for continuous integration, the ADVANTECH ARK-1124U IoT gateway box, which runs the same OS as the drone

- ARK-1124U : http://www.advantech.com/products/1-2jkbyz/ark-1124u/mod_e4faa6eb-0cd1-4c55-b2d9-0dea6c8cbdf

- with Celeron dual Core (performance similar to ATOM Baytrail E3840 https://www.cpubenchmark.net/compare/Intel-Celeron-N3350-vs-Intel-Atom-E3840/2895vs2124)

- 4 GB RAM

- 64 GB SSD

- Module Wifi

- Details of the ADVANTECH gateway:

- Intel Celeron N3350 Dual Core SoC

- VGA Display/2x RS-232/422/485/4x USB 3.0

- 1 x Full-size mini PCIe with SIM holder for communication module

- 1x M.2 E-key for WIFI module

- -20 ~ 60 °C extended operating temperature

- TPM 2.0 default built-in

- 12V lockable DC jack power input

- Optional 12~24V power module (Dual Layer)

- Advantech iDoor module compatible (Dual Layer)

- Advantech WISE-PaaS/RMM support
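The Azure items in the bill of materials mention deploying the Docker containers as IoT Edge modules through IoT Hub. As a rough illustration only, the sketch below builds a deployment manifest in Python. The overall layout (`modulesContent`, `$edgeAgent`, `$edgeHub`, `properties.desired`) follows the public IoT Edge manifest format, but the module names `camera` and `detector`, the registry `example.azurecr.io`, the image tags and the route are hypothetical placeholders, not the values used in the project.

```python
import json


def build_module(image, create_options=None, version="1.0"):
    """Return one entry for the "modules" section of an IoT Edge manifest."""
    return {
        "version": version,
        "type": "docker",
        "status": "running",
        "restartPolicy": "always",
        "settings": {
            "image": image,
            # IoT Edge expects createOptions as a JSON string, not an object.
            "createOptions": json.dumps(create_options or {}),
        },
    }


def build_manifest(modules, routes):
    """Assemble a deployment manifest from custom modules and hub routes."""
    return {
        "modulesContent": {
            "$edgeAgent": {
                "properties.desired": {
                    "schemaVersion": "1.0",
                    "runtime": {
                        "type": "docker",
                        "settings": {"minDockerVersion": "v1.25"},
                    },
                    "systemModules": {
                        "edgeAgent": {
                            "type": "docker",
                            # Image tags are assumptions; pin the ones you use.
                            "settings": {"image": "mcr.microsoft.com/azureiotedge-agent:1.0"},
                        },
                        "edgeHub": {
                            "type": "docker",
                            "status": "running",
                            "restartPolicy": "always",
                            "settings": {"image": "mcr.microsoft.com/azureiotedge-hub:1.0"},
                        },
                    },
                    "modules": modules,
                }
            },
            "$edgeHub": {
                "properties.desired": {
                    "schemaVersion": "1.0",
                    "routes": routes,
                    "storeAndForwardConfiguration": {"timeToLiveSecs": 7200},
                }
            },
        }
    }


# Hypothetical modules: one grabbing frames, one running the AI model.
manifest = build_manifest(
    modules={
        "camera": build_module("example.azurecr.io/camera:0.1"),
        "detector": build_module("example.azurecr.io/detector:0.1"),
    },
    routes={"detectorToCloud": "FROM /messages/modules/detector/outputs/* INTO $upstream"},
)
print(json.dumps(manifest, indent=2))
```

Generating the manifest from code rather than editing JSON by hand makes it easier for a CI/CD pipeline to swap image tags per build before the deployment is pushed to the gateway or the drone.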

ON THE EDGE SIDE : Microsoft IoT Edge

- Igor Leontiev created 3 ADVANTECH IoT Boxes :

- One for CI/CD DevOps, and advanced debugging tools and GUI

- Another one with a home-made version of a Linux distribution :

- no Linux GUI, to spare resources for the AI compute

- the IoT Edge SDK already packaged

- a Docker application already packaged

- A last box used as a Dev R&D box, to try many combinations of installations

- version of Linux,

- GUI vs no-GUI,

- version of the camera and its tricky Linux drivers, made harder by the fact that we run inside a Docker container,

- Type of camera : USB vs. RJ45 vs. Wifi

- type of AI (many versions of TensorFlow, OpenCV …),

- Power needed in terms of CPU to run YOLO libraries,

- …

- Last but not least, Igor also created a streaming server so that the camera OUTPUT is not the bare RAW image as captured, but a version enriched with the objects / defects that the AI has detected. Possible outputs :

- VGA cable (via a tiny and fragile adapter from the drone to VGA)

- RJ45

- Wifi

For the demo, we used RJ45 as plan A, and VGA as plan B just in case.

From experience, the organizers of MS Experiences prefer to ban any Wifi. Luckily, we had 3 possibilities.
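To make the idea of an "enriched" stream more concrete, here is a minimal sketch of the overlay step: detections are drawn as rectangles on a copy of the raw frame before it is sent to the output. It is pure Python for readability. The frame layout (rows of RGB tuples), the detection format (`probability` plus a normalized `box`) and the function names are assumptions made for this illustration; the actual streaming server is not described in this article and most likely relies on an imaging library instead.

```python
def to_pixels(box, width, height):
    """Convert a normalized (left, top, width, height) box to pixel corners."""
    left, top, w, h = box
    x0 = max(0, min(width - 1, int(left * width)))
    y0 = max(0, min(height - 1, int(top * height)))
    x1 = max(x0, min(width - 1, int((left + w) * width) - 1))
    y1 = max(y0, min(height - 1, int((top + h) * height) - 1))
    return x0, y0, x1, y1


def annotate(frame, detections, threshold=0.5, color=(255, 0, 0)):
    """Return a copy of the frame with one rectangle per confident detection."""
    height, width = len(frame), len(frame[0])
    out = [row[:] for row in frame]  # copy rows so the raw frame is untouched
    for det in detections:
        if det["probability"] < threshold:
            continue  # skip low-confidence detections
        x0, y0, x1, y1 = to_pixels(det["box"], width, height)
        for x in range(x0, x1 + 1):  # top and bottom edges
            out[y0][x] = color
            out[y1][x] = color
        for y in range(y0, y1 + 1):  # left and right edges
            out[y][x0] = color
            out[y][x1] = color
    return out


# Tiny 8x8 black frame with one confident and one weak detection.
raw = [[(0, 0, 0) for _ in range(8)] for _ in range(8)]
detections = [
    {"probability": 0.9, "box": (0.25, 0.25, 0.5, 0.5)},
    {"probability": 0.1, "box": (0.0, 0.0, 0.25, 0.25)},
]
enriched = annotate(raw, detections)
```

Keeping the raw frame untouched means the same capture can still be archived or re-analysed, while only the annotated copy goes out over RJ45, VGA or Wifi.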

VISEO & IMAGE ACQUISITION

As with a real professional camera, we are not using a regular camera, but one made of two distinct parts, one coming from Germany and the other imported from Japan.

- An industrial sensor, from the brand iDS

- A professional lens

- All combined

- Installing the drivers – testing on Windows, then on Linux, then Docker+Linux !

- once deployed in the Drone

https://twitter.com/VISEOGroup/status/1060129485717209088
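One reason the Docker+Linux step is harder than plain Linux is that the container does not see the host's camera unless it is explicitly exposed. One common way to do this, sketched below, is to pass the device through the Docker container create options, which IoT Edge accepts as a JSON string in a module's `createOptions`. The `HostConfig.Devices` fields come from the Docker Engine API; the device path `/dev/video0` is only an assumption, since an industrial sensor with a vendor driver may not show up as a standard video device, and this is not necessarily how the team solved it.

```python
import json


def camera_create_options(host_device, container_device=None):
    """Build a createOptions JSON string exposing a host device to a container."""
    options = {
        "HostConfig": {
            "Devices": [
                {
                    "PathOnHost": host_device,
                    # Default: same path inside the container as on the host.
                    "PathInContainer": container_device or host_device,
                    "CgroupPermissions": "rwm",  # read, write, mknod
                }
            ]
        }
    }
    return json.dumps(options)


# Hypothetical device path; check what your camera driver actually creates.
print(camera_create_options("/dev/video0"))
```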

HOW TO CREATE THE “home-made” AI ?

This is another story that deserves a white paper of its own. Without disclosing any NDA information, be armed with :

- A true Data Scientist (i.e. not someone who vaguely studied some maths and statistics)

- Follow a very strict and robust model (used for over 20 years in Data Mining) => CRISP :

- ADAPT the CRISP to take into account the modern DevOps & Cloud approach.

As for us, building on our AI+DevOps experience, we used a simplified version of TDSP : https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/team-data-science-process-for-devops

- A lot of patience : hours, days, nights !!!

training, and re-training, and re-training, and re-training, and re-training, and re-training…. the AI model, until you get an adequate one

- Some more hours, days, nights assessing the model on Linux. Here : Artem Sheiko, the master chef of this AI

- And lots of coffee, next to a skeptical customer, a top expert in the railway field, who will push us to the limits !!
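Deciding when a re-trained model is "adequate" needs a number to compare between runs. The sketch below shows one common way to score an object detector: match predicted boxes to ground-truth boxes by intersection over union (IoU), then compute precision and recall. This is a generic illustration, not the evaluation protocol used on the project (which is under NDA); the 0.5 IoU threshold, the greedy matching and the box format `(x0, y0, x1, y1)` are assumptions.

```python
def iou(a, b):
    """Intersection over union of two (x0, y0, x1, y1) boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predicted, truth, iou_threshold=0.5):
    """Greedy one-to-one matching of predicted boxes to ground-truth boxes."""
    unmatched = list(truth)
    true_pos = 0
    for box in predicted:
        # Pick the remaining ground-truth box that overlaps this one the most.
        best = max(unmatched, key=lambda t: iou(box, t), default=None)
        if best is not None and iou(box, best) >= iou_threshold:
            unmatched.remove(best)  # each ground-truth box matches only once
            true_pos += 1
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(truth) if truth else 0.0
    return precision, recall


# Two real defects; the model finds one of them and raises one false alarm.
truth = [(0, 0, 10, 10), (20, 20, 30, 30)]
predicted = [(1, 1, 10, 10), (50, 50, 60, 60)]
precision, recall = precision_recall(predicted, truth)
```

Tracking both figures after each training run helps when discussing results with a domain expert: low precision means too many false alarms, low recall means missed defects.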

APPENDICES

- Two other camera angles :

- Focus Speakers: https://youtu.be/oaYe6maXrcg?t=3242

- Focus demo https://www.youtube.com/watch?v=S3ZPtwAPG8Q&t=92m28s&feature=youtu.be

- https://youtu.be/uC6skEWqgdg?t=91

- Hot reaction after the keynote : https://www.youtube.com/watch?v=5V3MqerziqY&feature=youtu.be

- Azure Logic Apps