The tools available to manage Windows Azure are numerous, whether they come from CodePlex, are in CTP, are released by Microsoft, or are third-party. To add some more complexity, the very same tool (e.g. SQL Management Studio) lets you perform the same action in many possible ways, so the degree of freedom is huge. However, not every combination works, some are not documented at the moment, and sometimes you have to find a KB article to understand why something does not work the way it should.

This post provides an easy way of migrating your on-premise database to SQL Azure, and of using the latest Microsoft tools to get a better SQL ALM than ever before.

After having migrated your on-premise database by completing the first 4 steps from my previous post (the other steps just provide ways to check the integrity of your migration), you’ll need to create a DACPAC project. However, this time you’ll be using the following latest Microsoft tools:

* SQL Server Data Tools [free], which extends your Visual Studio 2010 capabilities: http://msdn.microsoft.com/en-us/data/gg427686 (check all prerequisites; the install could take up to 1 hour). You could consider using Visual Studio 11 Beta, but only for SQL Azure / DACPAC projects (the Windows Azure bit is another story with VS11 Beta). This extension lets you work with *.sql files in a better way, as we will see later.


* SQL Server 2012 Management Studio [currently, get it from SQL Server 2012 and uncheck everything except Management Studio]. I would recommend not using SQL Management Studio 2008 (unless you want some frustration). Indeed, with the latest version of Data-Tier Application projects it presents some minor limitations (bugs? features?), and its working principles have been ‘improved’ in “SQL Server 2012 Management Studio”.

To create the new type of Data-tier Application project, called “SQL Server Database project”, within Visual Studio 2010, follow steps 5 to 7 of my previous post mentioned above (as a reminder, you need to install SQL Server Data Tools).

You could also let “VS2010 with SQL Server Data Tools” migrate an existing VS2008 DB project:

In the properties of the new type of DB project, you should target the “SQL Azure” platform and set the Data-tier Application properties to a suitable version number (this number is used by the “intelligence” of DACPAC, which is now handled by SQL Server 2008 R2 or 2012; we no longer deploy “stupid” *.sql files).

Note that with this new type of DB project, you can reduce your number of *.sql files considerably, for instance from 800 files to 100 files, depending on the complexity of your database. This is because the new project type is organized differently:

* No more huge number of crazy sub-sub-sub-sub-folders and separate files for every single index, constraint, etc. Here is an example of an old VS2008 DB project applied to a very basic and small project (ASP.Net membership provider):

* Now, everything is nicely grouped in a more “SQL Server-like” fashion. In the top panel below (1), you’ll find a visual SQL designer of a table with its associated constraints and properties, and in the bottom panel (2), you’ll find the plain-text version as in the old days, except that your constraints, indexes, … are now part of the same *.sql file.

The reduction in the number of files also means fewer files to get, check in, and track in source control.

You may also notice a few variations in the “Build” options in the *.sql file’s properties (3). However, unless I set the additional deployment files to Build=”None” or “Build Extension Configuration”, I did not manage to make it work properly (as in VS2008) when using related *.sql files called from a master *.sql file with the “:r” option.

With this type of project, targeting “SQL Azure” represents the most constraining scenario (because your T-SQL has to be cleaned up), and it lets you publish either on-premise or to SQL Azure using the very same T-SQL (you might add some IFs if you really need specific SQL instructions on SQL Azure).

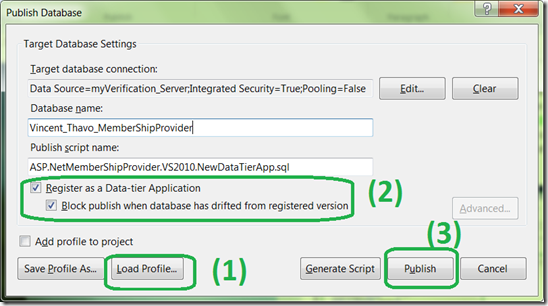

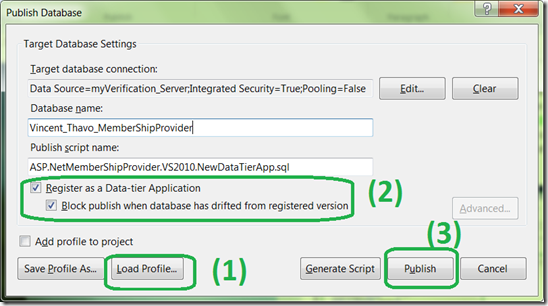

As far as publishing is concerned, you can define a configuration file per environment (e.g. click (1) to load various profiles, such as on-premise staging, on-premise validation, localhost, SQL Azure staging, SQL Azure PROD, …). To get the new publish window (notice it is not called “deploy” anymore), right-click on your VS DB project > “Publish…”, then wait 2 to 10 seconds for the modal pop-up to appear:

Ensure you have checked the 2 options (2) to benefit from the latest DACPAC type of deployment, and simply click (3) [which also means you could deploy the old-fashioned way by configuring the numerous options under Advanced…].

During publishing, you’ll see a new output window that keeps things nice and tidy, as opposed to the previous plain-text version. It reminds me of the Publish for Windows Azure, but in a more usable way! (i.e. no tiny reports with a tiny scrollbar where, when a bug occurs, you don’t know what happened). I like this new “Data Tools Operations” output window:

- it has many “view details” links to open a full-size window when needed

- it can be collapsed (like the Windows Azure deployment window)

- …

It also seems to deploy/publish and generate data a lot faster than the previous version (maybe due to better caching).

Now, if you want to deploy to SQL Azure, simply load the corresponding config file and there you go: it’s on SQL Azure in 1 click! You could also use a command line to do this, so that a TFS Build could publish automatically for you (I would not recommend it, since it will start to impact your Windows Azure bill considerably if you publish continuously, 10 or 20 times a day for instance).
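The post does not say which command line to use. As a rough sketch, assuming the SqlPackage.exe utility that ships with the DAC framework / SQL Server Data Tools, a build step could assemble the publish call as below. The file names are hypothetical, and the helper `build_publish_command` is only an illustration, not part of any Microsoft tooling.

```python
from typing import List


def build_publish_command(dacpac_path: str, profile_path: str,
                          sqlpackage_exe: str = "SqlPackage.exe") -> List[str]:
    """Build the argument list for a SqlPackage.exe publish run.

    Assumption: SqlPackage.exe is installed and reachable under the given
    name or path. The publish profile is the per-environment config file
    saved from the Visual Studio "Publish..." window.
    """
    return [
        sqlpackage_exe,
        "/Action:Publish",                # publish (deploy) the package
        f"/SourceFile:{dacpac_path}",     # the built DACPAC
        f"/Profile:{profile_path}",       # target server, database, options
    ]


if __name__ == "__main__":
    # Hypothetical paths, for illustration only.
    command = build_publish_command(
        r"bin\Release\MyDatabase.dacpac",
        "SqlAzureStaging.publish.xml",
    )
    # Print the command instead of running it; a build script could pass
    # the same list to subprocess.run(command, check=True).
    print(" ".join(command))
```

Keeping one publish profile per environment means the build only has to swap the profile path, while the DACPAC stays the same.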

Example of deployment times for a database with less than 100 MB of data to be populated:

- on local DB: 5 sec

- on SQL Azure (broadband): 4 min

- on SQL Azure (USB dongle Internet, no broadband): 15 min

The last new feature that I am going to present, and one that really eases your ALM, is “Snapshots”. Before any Staging or Prod deployment, keep track of the exact deployment package that you delivered by storing it as a Snapshot. This saves your project as a DACPAC file within seconds (recall: a DACPAC file is a Zip file of your DB schema stored as XML files), which should then be stored in your favorite code repository. In TFS, for example, it could be a TFS release branch.
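Since a DACPAC is just a Zip archive, you can peek inside a snapshot with any Zip library. Here is a minimal sketch in Python; the helper name `list_dacpac_entries` is mine, and the demo uses a tiny in-memory stand-in archive with illustrative entry names rather than a real snapshot.

```python
import io
import zipfile
from typing import BinaryIO, List, Union


def list_dacpac_entries(dacpac: Union[str, BinaryIO]) -> List[str]:
    """Return the sorted names of the files stored inside a DACPAC.

    Accepts a file path or a binary file-like object, because a DACPAC
    is a regular Zip archive.
    """
    with zipfile.ZipFile(dacpac) as archive:
        return sorted(archive.namelist())


if __name__ == "__main__":
    # Stand-in archive built in memory; the entry names are illustrative.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("model.xml", "<DataSchemaModel />")
        archive.writestr("Origin.xml", "<DacOrigin />")
    buffer.seek(0)

    for name in list_dacpac_entries(buffer):
        print(name)
```

With a real snapshot, you would pass the path of the *.dacpac file instead of the in-memory buffer.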

These new possibilities allow a huge gain in productivity!


Although I have been using these tools on large projects, I would be really interested in hearing from anyone with further practical feedback, or maybe a different usage.
